Tutorial on Approximate Bayesian Inference for Linear Regression

Aim and Scope of this Tutorial

This tutorial exemplifies the use of approximate Bayesian inference with both a variational Bayesian (VB) and Markov Chain Monte Carlo (MCMC) approach on a very simple linear regression model. It is aimed at people who already have a basic understanding of probability theory including Bayes’ rule, probability density functions (especially normal and gamma) and expectations and want to understand the inner workings, advantages and disadvantages of VB and MCMC approaches.

There are a lot of tutorials on either approaches but I have not come across a tutorial that explains and describes both methods on the same model. I hope the following documents will fill this gap. I believe that understanding the details of the VB and MCMC approaches in this simple model paves the way towards their application in more complex models. The described concepts are mainly taken from and expanded upon Bishop’s “Pattern Recognition and Machine Learning” (2006).

Introduction

Bayesian inference is a powerful tool to identify a variety of statistical models from which we can make make predictions and quantify the uncertainties we have in those predictions. This uncertainty is expressed in terms of probabilities, in particular through the use of probability density functions (PDF). Throughout this tutorial we will only deal with continuous random variables whose PDF is denoted by $p$, having the property $\int p(x) dx = 1$.

The Basic Model

In this tutorial, we will discuss the following basic linear regression model:

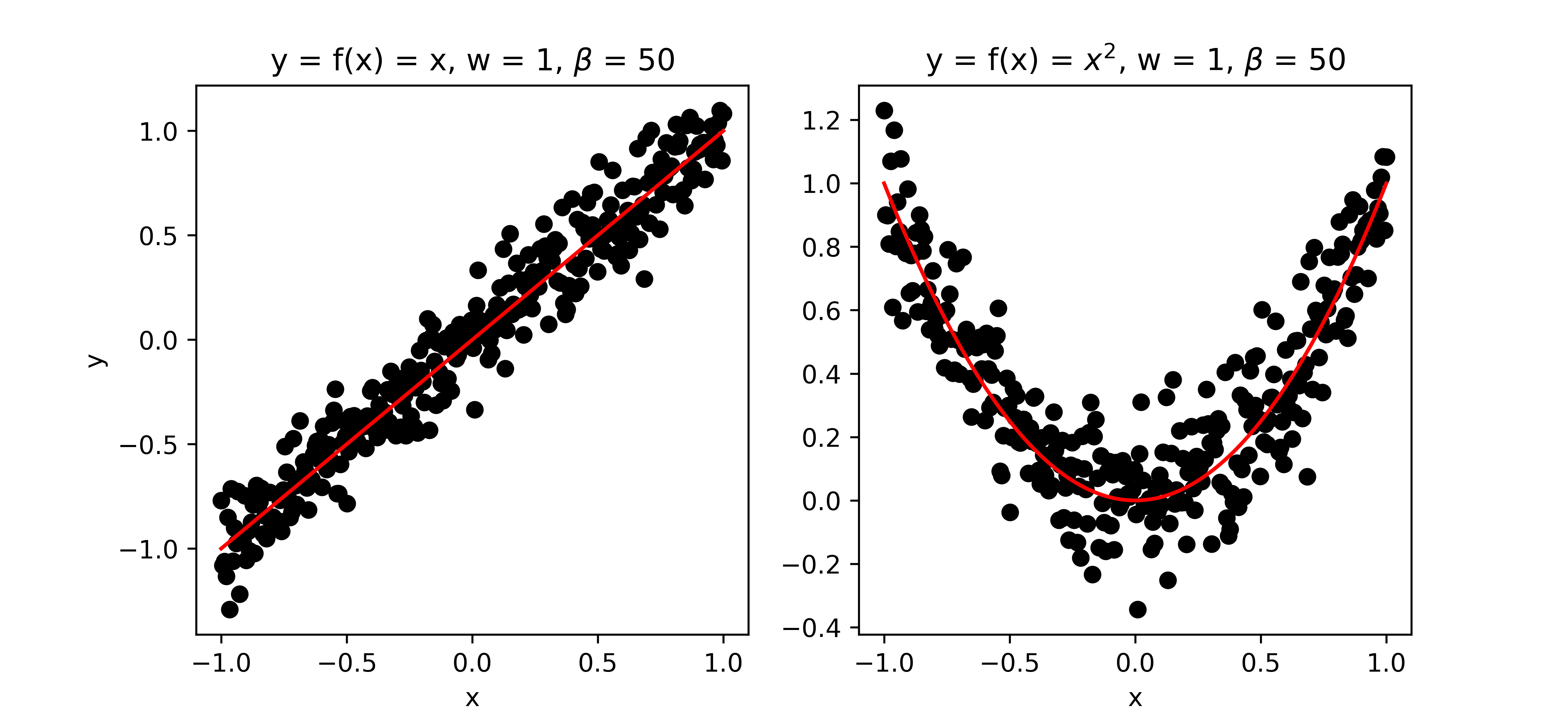

\[y = w f(x) + \varepsilon\] \[\varepsilon \sim \mathcal{N}(0,\beta^{-1})\]The input and output (or target) of the model are $x$ and $y$, respectively, and collectively referred to as data $\mathcal{D}$. The function $f$ can be any continuous function of the input $x$. The model has two unknown parameters that we want to infer from the data $\mathcal{D}$: the slope parameter $w$ and the precision $\beta$ (inverse of the variance) of the measurement noise $\varepsilon$. For the purpose of illustration we will assume the parameter $w$ to be one dimensional, so we can plot the parameter space.

Figure 1: Examples of some data generated by the model.

Bayesian Inference

The goal of Bayesian inference in the context of this model is to infer the posterior distribution $p(w,\beta|\mathcal{D})$ of the unknown parameters given some data. Using Bayes’ rule this posterior can be calculated as follows:

\[p(w,\beta|\mathcal{D})=\frac{L(\mathcal{D}|w,\beta)p(w,\beta)}{P(\mathcal{D})}\]The function $L(\cdot)$ expresses the likelihood of observing the data given the unknown parameters and the PDF $p(w,\beta)$ is the prior distribution over unknown parameters. It encompasses information we have on the unknown parameters before any data is observed. The function $P(\cdot)$ is called marginal likelihood and can be considered as evidence of observing the data given our model. If we would do Bayesian inference on different models, a secondary goal (apart from parameter estimation) is model selection for which the marginal likelihood can be used. $P(\cdot)$ is called marginal likelihood, as it can be calculated by summing over, also known as marginalising out, the unknown parameters from the numerator:

\[P(\mathcal{D})=\int L(\mathcal{D}|w,\beta)p(w,\beta) dw d\beta\]Why do we need approximation?

In practice, i.e. when the model is formulated by differential equations, it becomes infeasible to evaluate the posterior distribution. Quoting Bishop (2006) p. 462:

“In such situations, we need to resort to approximation schemes, and these fall broadly into two classes, according to whether they rely on stochastic or deterministic approximations. Stochastic techniques such as Markov chain Monte Carlo […] have enabled the widespread use of Bayesian methods across many domains. They generally have the property that given infinite computational resource, they can generate exact results, and the approximation arises from the use of a finite amount of processor time. In practice, sampling methods can be computationally demanding, often limiting their use to small-scale problems. Also, it can be difficult to know whether a sampling scheme is generating independent samples from the required distribution.”

”[…] deterministic approximation schemes [such as VB] […] are based on analytical approximations to the posterior distribution, for example by assuming that it factorizes in a particular way or that it has a specific parametric form such as a Gaussian. As such, they can never generate exact results, and so their strengths and weaknesses are complementary to those of sampling methods.

Model (1)-(2) is actually simple enough so so that the posterior distribution and marginal likelihood can be calculated analytically and no approximation is required. For this particular example model, having the analytic solution is very useful as it allows us to compare the ‘true’ solution with the approximate results we get from VB and MCMC.

The exact analytic solution is described in Part 1.

The approximate solution using variational Bayesian is described in Part 2.

The approximated solution using Markov Chain Monte Carlo is described in Part 3.